If you don’t come from the IT world, it can be difficult to understand what IT systems are and how they work. For the past couple of years I’ve been trying to wrap my head around the complexity of IT systems, and one thing that helped me was fitting them into simpler concepts.

Essentially, every IT system must solve some problem and deliver value to its users. Users provide some input and expect something in return; what happens in between is the job of the IT system.

Personally, I like to break it down into a single, simple concept:

In its essence, there are three parts: Input, IT system, Output.

- The user provides some input, for example a request to see the contents of a website;

- The request then goes into the IT system (the Black Box): a chunk of code hosted on some physical hardware (even the cloud runs on physical machines somewhere);

- After the code is executed, the user receives an output: they see the content of the webpage.
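To make the three parts concrete, here is a minimal Python sketch in which the black box is just a function: it takes an input (a requested page path) and returns an output (the page content). The `PAGES` dictionary and the `handle_request` function are hypothetical names made up for illustration; a real system would have far more going on inside the box.

```python
# Hypothetical page store standing in for whatever data a real system holds.
PAGES = {
    "/": "<h1>Home</h1>",
    "/about": "<h1>About us</h1>",
}


def handle_request(path: str) -> str:
    """The 'black box': turn an input (a requested path) into an output (page content)."""
    # Unknown paths still produce an output, just a different one.
    return PAGES.get(path, "<h1>404 Not Found</h1>")


# Input -> IT system -> Output
user_input = "/about"
output = handle_request(user_input)
print(output)
```

From the user’s point of view only `user_input` and `output` are visible; everything inside `handle_request` is the black box.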

The complexity varies depending on the task the IT system is built to perform. At this level of abstraction, it makes sense to me to think of systems at a high level.

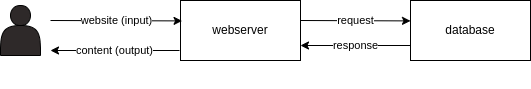

For example, when a user wants to visit a website, they enter a web address. The request is routed over the internet to a web server, which queries a database to fetch the website’s data and then returns the website content to the user.
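The same request path can be sketched in a few lines of Python, with the standard library’s `sqlite3` playing the database and `urllib.parse` standing in for routing (it only extracts the path from the web address). The table layout, the function names `create_database`, `route` and `web_server`, and the example URL are all assumptions for illustration, not how any particular website works.

```python
import sqlite3
from urllib.parse import urlparse


def create_database() -> sqlite3.Connection:
    """Set up an in-memory database holding the website's data (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE pages (path TEXT PRIMARY KEY, content TEXT)")
    conn.execute("INSERT INTO pages VALUES (?, ?)", ("/", "Welcome to the home page"))
    conn.commit()
    return conn


def route(url: str) -> str:
    """Stand-in for internet routing: keep only the path part of the web address."""
    return urlparse(url).path or "/"


def web_server(conn: sqlite3.Connection, path: str) -> tuple[int, str]:
    """Query the database for the requested page and return (status code, content)."""
    row = conn.execute("SELECT content FROM pages WHERE path = ?", (path,)).fetchone()
    if row is None:
        return 404, "Page not found"
    return 200, row[0]


# User enters a web address -> routed to the web server -> database -> content back to the user.
conn = create_database()
status, content = web_server(conn, route("https://example.com"))
print(status, content)
```

Each function maps to one box in the picture above, which makes it easy to see where extra components such as a cache or a load balancer would slot in.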

A simple website is easy to visualize and understand; however, real-world IT systems include many more components: load balancers, rate limiters, message queues, microservices, database replication, content delivery networks and others.

Keeping this simple input-output concept in mind makes it much easier to grasp the complexity of an IT system.

To put this concept to further use, we can measure the inputs, the outputs and what happens in between.

By tracking how many requests go into the IT system, you can expect, based on its intended behavior, a certain amount of output. If the relationship between input and output changes, you can draw conclusions about the system’s performance. For example, if it takes longer for inputs to be turned into outputs, you can suspect performance degradation; if there is no output at all, you can detect a system outage.
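Here is a minimal Python sketch of that idea, assuming we can count inputs and outputs over some time window and record how long each output took. The class name `IOMonitor`, the baseline latency, the 2x degradation factor and the 95% expected output ratio are hypothetical values chosen for illustration; real monitoring would tune them to the system’s intended behavior.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class IOMonitor:
    """Counts inputs and outputs in one time window and records each output's latency."""
    baseline_latency_s: float         # hypothetical 'normal' processing time per request
    degradation_factor: float = 2.0   # hypothetical: 2x slower than baseline counts as degraded
    min_output_ratio: float = 0.95    # hypothetical: expect at least 95% of inputs to produce output
    inputs: int = 0
    outputs: int = 0
    latencies_s: list[float] = field(default_factory=list)

    def record_input(self) -> None:
        self.inputs += 1

    def record_output(self, latency_s: float) -> None:
        self.outputs += 1
        self.latencies_s.append(latency_s)

    def status(self) -> str:
        if self.inputs == 0:
            return "no traffic"
        if self.outputs == 0:
            return "outage"  # requests come in, but nothing comes out
        if self.outputs / self.inputs < self.min_output_ratio:
            return "dropping requests"  # less output than the intended behavior predicts
        if mean(self.latencies_s) > self.baseline_latency_s * self.degradation_factor:
            return "degraded"  # outputs arrive, but much slower than usual
        return "healthy"


monitor = IOMonitor(baseline_latency_s=0.2)
for latency in (0.15, 0.25, 0.2):
    monitor.record_input()
    monitor.record_output(latency)
print(monitor.status())
```

The checks are ordered from most to least severe, so a window with no output at all is reported as an outage rather than as slow.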

Measuring inputs and outputs not only lets you understand how your product is performing, but also helps you detect the inevitable system failures, performance degradation and incidents, as well as understand user behavior.

This concept is fundamental to understanding IT systems (at least for me), and it leads to a better understanding of both their performance and user behavior.